Protection against Adversarial Attacks

Remedies for Machine Learning Systems

Machine learning systems for object recognition and object classification have improved significantly in the past few years. These advances have led to the use of such systems in numerous areas, e.g. in autonomous vehicles to detect traffic signs. At the same time, however, computer science has described methods of attacking such systems, known as "adversarial attacks".

Such "attacks" are carried out by means of "adversarial examples", which aim to mislead the classification result. For this purpose, specific input signals are deliberately manipulated so that an object (e.g. a road sign) is incorrectly recognized. These manipulations, which have been described in image, text, and speech recognition among other fields, are in most cases imperceptible to humans. To carry out an attack, the attacker may, for example, subtly alter the pixels of an image or add barely perceptible image noise. The system then misclassifies the depicted objects. In addition to these two-dimensional edits, attackers may also mislead systems with manipulated three-dimensional objects. This potentially creates further problems, the effects of which cannot be estimated at present.
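The pixel manipulation described above can be illustrated with a minimal sketch. It is not a real attack on a real system: the "classifier" is a hypothetical linear score over 16 pixels, and the weights, bias, pixel values, and step size `epsilon` are made-up assumptions chosen for illustration. The perturbation follows the general idea of sign-based methods such as the fast gradient sign method, where each pixel is nudged slightly in the direction that most reduces the score of the correct class.

```python
# Toy illustration only: a hypothetical linear "classifier" over a flattened image.
# All weights, pixel values, and the step size are made-up assumptions.

def classify(pixels, weights, bias):
    """Return "stop sign" if the weighted pixel sum is positive, else "other"."""
    score = sum(p * w for p, w in zip(pixels, weights)) + bias
    return "stop sign" if score > 0 else "other"


def perturb(pixels, weights, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    def sign(v):
        return (v > 0) - (v < 0)

    shifted = [p - epsilon * sign(w) for p, w in zip(pixels, weights)]
    # Keep every pixel inside the valid intensity range [0, 1].
    return [min(1.0, max(0.0, s)) for s in shifted]


weights = [0.5, -0.25, 0.75, -0.5] * 4  # hypothetical model parameters
bias = -0.9
image = [0.5] * 16                      # hypothetical 4x4 grayscale image

adversarial = perturb(image, weights, epsilon=0.02)

print(classify(image, weights, bias))        # label for the original image
print(classify(adversarial, weights, bias))  # label for the perturbed image
print(max(abs(a - b) for a, b in zip(adversarial, image)))  # largest pixel change
```

In this sketch no pixel changes by more than about 2% of the intensity range, yet the label flips from "stop sign" to "other". Attacks on real systems target far more complex models, but they exploit the same effect: many tiny, coordinated changes add up to a large change in the model's output.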

In addition to technical approaches to minimizing these risks, the question arises whether there are legal instruments that can be used successfully against "adversarial attacks" and, if so, which ones. Prof. Alfred Früh and his team are investigating this question from a private law perspective as part of this ZLSR project. Given the international dimension of the topic, our research goes beyond the national legal framework and also covers other solutions, e.g. regulatory ones. The ZLSR team collaborates with international researchers in interdisciplinary workshops.

Publications:

Fausch, I.; Zeyer, D. (2024) "Angriffe auf KI-Systeme" (in German only)

Früh, A.; Haux, D.H. (2024) "Technical Countermeasures against Adversarial Attacks on Computational Law"

Früh, A.; Haux, D.H. (2022) "Foundations of Artificial Intelligence and Machine Learning"

Presentations and Events:

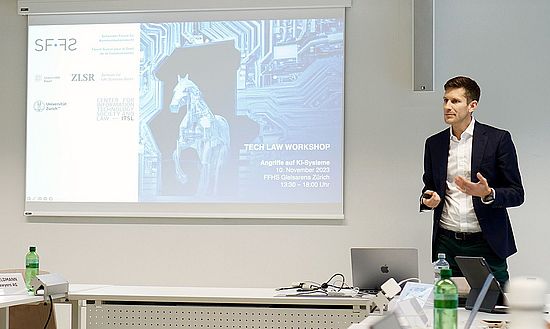

> "Tech Law Workshop - Angriffe auf KI Systeme", organized by Schweizer Forum für Kommunikationsrecht (SF FS) in cooperation with the Center for Life Sciences Law (ZLSR) and the Center for Information Technology, Society, and Law (ITSL), FFHS Gleisarena Zürich, Nov. 10, 2023

> Dario Haux, Privatrechtliche Schutzperspektiven für ML-Systeme, Presentation, Swiss Internet Governance Forum (Swiss IGF), BAKOM, Bern, Jun. 2, 2022

> Alfred Früh/Dario Haux, Immaterialgüterrechtlicher Schutz vor Adversarial Attacks. Privatrechtliche Schutzperspektiven für Machine Learning Systeme, Presentation, Vertiefung im Immaterialgüter- und Wettbewerbsrecht: Aktuelle Wissenschaft – Werkstattberichte & Diskussion, Lehrstuhl für Zivilrecht X (Prof. Dr. Michael Grünberger, LL.M. (NYU)), Bayreuth (via Zoom), Dec. 8, 2021

Impressions of the workshop